House lawmakers say existing regulatory powers can police generative AI abuse

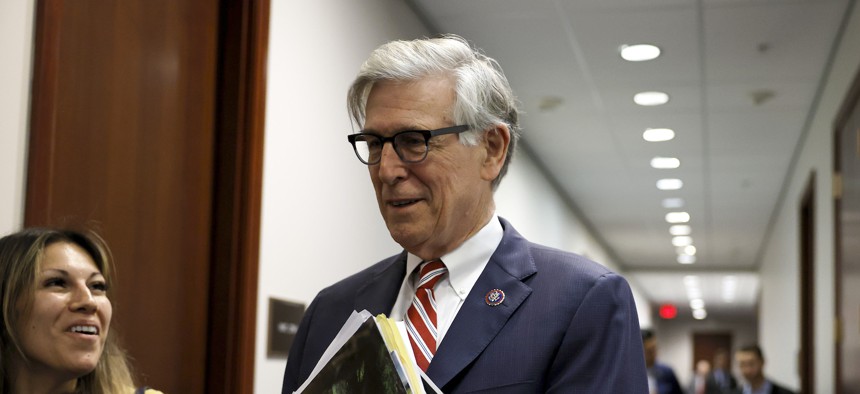

Rep. Don Beyer, D-Va., shown here at the U.S. Capitol in May 2023, says he's not interested in "setting up a big new federal bureaucracy" to police generative AI. Anna Moneymaker/Getty Images

Reps. Marcus Molinaro and Don Beyer discussed how legislative action – on a state and federal level – is needed to continue to police new generative artificial intelligence usage.

Two House lawmakers said existing regulatory agencies currently offer guardrails against threats posed by increasingly advanced generative artificial intelligence tools, but noted there's a role to be played by states and by Congress. Reps. Marcus Molinaro, R-N.Y., and Don Beyer, D-Va., speaking at a Washington Post Live event on Tuesday, noted that AI tools are already having an impact on election security and data privacy.

Molinaro specifically referenced the Federal Trade Commission’s resolution granting itself the authority to investigate AI software vendors via civil investigative demands.

“I do think that the threat of and the consideration of using the FTC to at least hold somebody to account is necessary, but where we're absent is broad congressional action,” said Molinaro. “There needs to be the legislative function.”

Molinaro said formal congressional action would give agencies a more defined function and role in policing AI software. Beyer added that the Federal Communications Commission is also equipped to regulate AI products.

“We have the resources right now the FCC to do the regulation at the end use case, rather than setting up a big new federal bureaucracy to do it, which we're not excited about,” Beyer said.

Molinaro and Beyer also touched on the immediate threats unregulated AI can pose to society, mainly in relation to the 2024 presidential election. Molinaro said that state governments are on the front lines of regulating AI and quelling the disinformation it could generate, and that they can act more nimbly than the federal government.

“States being the laboratories of democracy, they can act a little bit more quickly … and some are, both through their boards or organizations of elections or through state legislation,” he said. “But this is a space that we certainly have to come to some formal agreement on because again, it is about protecting democracy.”