Watchdog: 10 Government Agencies Deployed Clearview AI Facial Recognition Tech

andreusK/iStock.com

A Government Accountability Office report details how agencies used different versions of the biometric technology during the pandemic and protests.

Multiple federal agencies that employ law enforcement personnel used facial recognition technology designed and owned by non-government entities in recent years, and 10 deployed systems made by the controversial company Clearview AI.

In a 92-page report addressed to Congress and publicly released Tuesday, the Government Accountability Office offers details on a range of government implementations of the biometric technology. GAO Director for Homeland Security and Justice Gretta Goodwin confirmed that Reps. Jerry Nadler, D-N.Y., and Carolyn Maloney, D-N.Y., and Sens. Cory Booker, D-N.J., Chris Coons, D-Del., Edward Markey, D-Mass., and Ron Wyden, D-Ore., asked GAO to conduct the study.

“A goal of this project was to provide the ‘lay of the land’ in terms of federal law enforcement’s use of facial recognition technology,” she told Nextgov Tuesday.

But GAO found 13 of 42 agencies surveyed do not have a firm grasp of all the non-government facial recognition tools their teams use and therefore cannot fully assess accompanying dangers.

“By implementing a mechanism to track what non-federal systems are used by employees, agencies will have better visibility into the technologies they rely upon to conduct criminal investigations,” officials recommended in the report. “In addition, by assessing the risks of using these systems, including privacy and accuracy-related risks, agencies will be better positioned to mitigate any risks to themselves and the public.”

The report is a public version of a more sensitive document sent to Congress members in early April. But the June-dated document “omits sensitive information about agency ownership and use of facial recognition technology,” according to GAO.

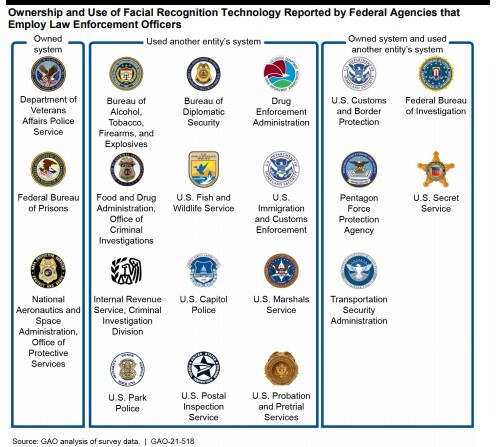

For the study, the watchdog sent a survey to those 42 federal law enforcement-aligned agencies, interviewed agency officials, and reviewed documents from some organizations. Specifically, GAO examined the ownership and use of facial recognition technology by those entities, the types of activities they use the technology for, and how they track their use of systems owned by entities outside the government.

GAO’s audit unfolded from August 2019 to June 2021, Goodwin said.

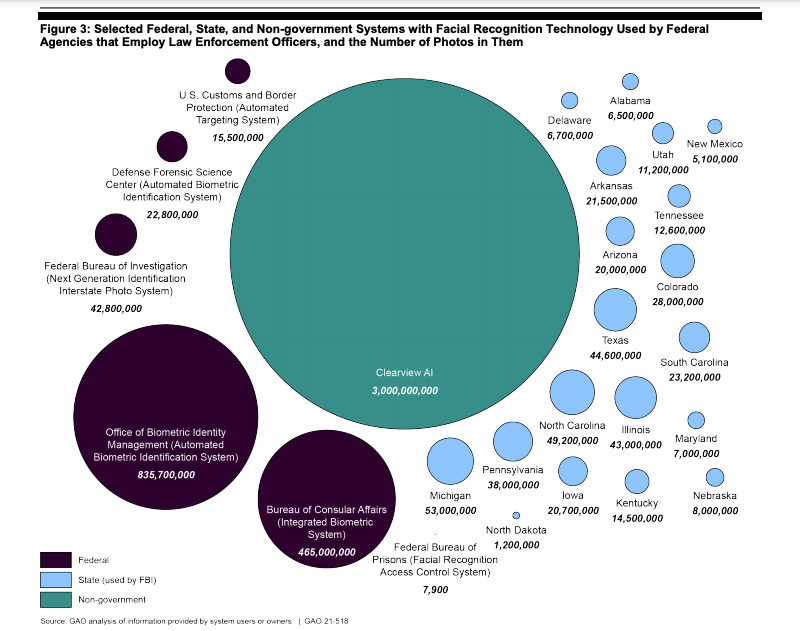

According to the public document, 20 of the 42 agencies that participated confirmed that they owned a system with facial recognition technology or used another owned by an external entity during a specific period. The systems could “include hundreds of millions or billions of photos of various types,” GAO wrote. In addition, they detailed an array of federally-built options and shed light on who deploys what.

Customs and Border Protection, the Marshals Service, and the Bureau of Alcohol, Tobacco, Firearms and Explosives, for example, reported using systems owned by other federal entities; state, local, tribal or territorial entities; Vigilant Solutions; Clearview AI; and other non-government providers during the period GAO examined. U.S.-based facial recognition company Clearview AI has spurred concerns about privacy and anonymity and faced lawsuits and legal complaints over its data collection practices.

GAO’s review included a chart showing the facial recognition systems used by those federal law enforcement agencies and the number of photos in each system. Clearview AI’s system is the largest of them, encompassing more than 3 billion photos.

“In Figure 3, we were hoping the visual would provide readers a greater understanding of how systems that federal agencies use can have different numbers of photos,” Goodwin said.

The FBI, Secret Service, and Park Police were among other agencies to use Clearview’s technology during the period GAO reviewed. But agencies also leveraged different types of face-reading technology across use cases after those dates—and particularly in times of national tension.

“Following the death of George Floyd while in the custody of the Minneapolis, Minnesota police department on May 25, 2020, nationwide civil unrest, riots, and protests occurred,” GAO wrote. “Six agencies told us that they used images from these events to conduct facial recognition searches during May through August 2020 to assist with criminal investigations.”

Those agencies included ATF, the Capitol Police, FBI, Marshals Service, Park Police, and U.S. Postal Inspection Service. Of them, the USPIS accessed Clearview AI to identify individuals “suspected of criminal activity that took place in conjunction with the period” of protests. Three agencies (the Bureau of Diplomatic Security, Capitol Police, and Customs and Border Protection) also reported using facial recognition “with regard to the January 6, 2021 events at the U.S. Capitol complex,” GAO confirmed. According to the watchdog, the Capitol Police used Clearview AI to “help generate investigative leads.”

Agencies also outlined using various public- and private-sector-owned facial recognition tools to support research and development projects, surveillance, traveler verification, COVID-19 response and more.

GAO further found that 13 agencies that confirmed their use of non-federal systems had incomplete information about exactly what was being deployed and lacked the means to effectively track it. As a result, the watchdog made two recommendations to each of those 13 federal agencies: implement a mechanism to track which non-federal systems their employees use, and assess the risks of using those systems. All but one agency concurred with the suggestions.

“One thing of note that’s in the podcast that accompanies the report: ‘There are innumerable ways in which facial recognition technology might be abused,’” Goodwin said. “‘That is why it truly is very important that federal agencies know what non-federal systems are used by their employees and assess the risks associated with using these systems.’”