This Year the World Woke Up to the Problems with AI Everywhere

Zapp2Photo/Shutterstock.com

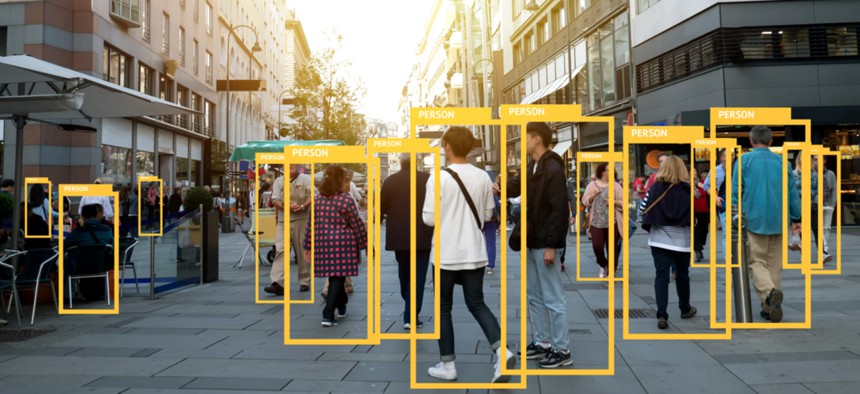

Bias is just one of the problems.

For all the money AI has made for big companies like Google and Facebook, this year the industry has woken up to some of the technology's pitfalls: it can easily become biased, there's no agreed-upon code of ethics governing it, and putting research into the real world too soon can cost lives.

Much of this came to a head with Uber's self-driving car fatality in March, when a research vehicle struck and killed a pedestrian. But research showing racial and gender disparities in facial recognition, along with reports highlighting potential misuses of artificial intelligence, also brought new attention to the issues.

Here are a few of the key events in 2018 highlighting the problems with modern AI:

February: Research and hearings

- Speakers at a February US Congressional hearing on artificial intelligence warn of AI's longstanding problems with bias, especially against people of color.

- A February report from experts in industry and academia highlights the myriad ways AI could be weaponized across digital, physical, and political domains.

- Also in February, researchers Joy Buolamwini and Timnit Gebru release Gender Shades, a paper showing huge disparities in facial recognition accuracy between white men and women of color.

March: Uber’s nightmare

- In March, Uber’s experimental self-driving car kills a pedestrian in Tempe, Arizona.

May: The AI fairness tools arrive

- Facebook announces Fairness Flow, its tool for identifying biases in data, and begins testing it on hiring algorithms.

September: More tools, and Congressional letters

- Google releases its tools for identifying biases in data.

- IBM launches its own tool for combating bias.

- Members of Congress send letters to federal agencies like the FBI and the Equal Employment Opportunity Commission, asking whether they have tools or policies for mitigating bias.

October: Amazon combats reports of biased AI

- A Reuters report in October says Amazon tested a recruiting tool that was biased against women.

December: Industry and government show a way ahead

- Microsoft executives push for regulation of bias in facial recognition algorithms.

- Experts say the social science that some AI is built on isn't as solid as advertised, and suggest ways to regulate the technology.

- Google says it has fixed the biased gender pronouns in Google Translate's translations.

- The EU publishes a draft of ethical guidelines for AI, coupled with a goal of €20 billion in AI investment.

The AI industry is still booming in Silicon Valley and beyond, but 2018 was a turning point: lawmakers and industry groups started asking less about “the singularity” and Terminator doomsday scenarios, and more about the harms that careless AI implementation could wreak in government and the private sector. As a result, the rush to turn AI research quickly into AI products may well meet greater resistance in the future, now that the public better understands AI's potential shortcomings, from bias to a lack of ethical design.

Rumman Chowdhury, Accenture's lead for responsible AI, told Quartz that this change is happening in industry as well, where accounting for ethics is becoming just another part of a company's business plan.

“We were finding that 25 percent of companies were having to do a complete overhaul of their system at least once a month because of inconsistent outcomes or bias, or they were just unsure. I’m calling that ethical debt,” she said. “If you don’t build ethics in from the ground up, it’s just like cybersecurity, you’re going to spend time later trying to patch up a fix. It’s much easier to do the work up front.”