How to Inoculate the Public Against Fake News

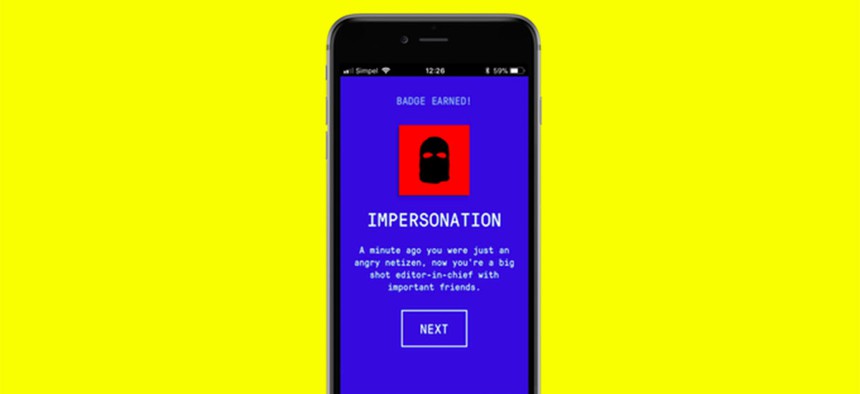

The Fake News Game simulates propaganda tactics such as impersonation, and awards badges once a round is completed. Drog/www.fakenewsgame.org

When people were given a toolbox of deceptive techniques and told to “play Russian troll,” they learned to reject disinformation.

Friday’s indictment of 13 Russians on charges of attempting to defraud the public and influence the U.S. presidential election shows that the United States remains vulnerable to what the indictment calls “information warfare” but what others call simply fake news. A new research paper released Monday from two Cambridge University researchers shows how to counteract it.

Once a person accepts an untruth, dislodging it can be very difficult, especially if the person’s online social group is pushing that piece of fake news. Some research has suggested that this is because the emotional need for acceptance goes on to influence higher-order rational thinking.

A broad cross-section of research suggests that the best defense against misinformation is to inoculate people against it, that is, by exposing people to the precise fake news they are likely to be targeted with. This is why Emmanuel Macron’s 2017 French presidential campaign set up honeypot email accounts to attract the Russian hackers backing Putin ally Marine Le Pen (a fact Defense One broke in December 2016). When Macron’s emails leaked and Russian-linked social media accounts began to spread news of the leaks, along with disinformation about their contents, the Macron campaign was able to easily discredit the efforts. The operation backfired.

“Inoculation is the key to countering disinformation,” said George Mason University researcher John Cook, whose work on inoculation theory was cited by the Cambridge paper. “There are a number of ways to approach inoculating people against disinformation. For example, general inoculation warns people against the general threat that they might be misinformed. However, this can potentially backfire by making people cynical about all forms of information, eroding belief in facts as well as falsehoods.” When examining misinformation used in the climate change debate, Cook found “that more targeted inoculations don’t reduce trust in scientists, indicating that we need to be more strategic in how we counter disinformation.”

But most of the time, it’s difficult or impossible to anticipate precisely what sort of misinformation or lies an adversary might spread. Sometimes, it’s unknown even to that very adversary.

Consider the Internet Research Agency, or IRA, the group targeted by the FBI in its Friday indictment. The Washington Post, which talked to former IRA employees, found that the trolls followed themes more than scripts as they produced content, events, blog posts, and chatroom comments. They created content continuously and extemporaneously, in a process of never-ending experimentation.

One former employee described how “he and his colleagues would engage in a group troll in which they would pretend to hold different views of the same subject and argue about it in public online comments. Eventually, one of the group would declare he had been convinced by the others. ‘Those are the kinds of plays we had to act out,’ he said.”

Different workers had different assignments and, as the indictment notes, assumed widely varying personas to spread misinformation.

So how do you warn potential victims about what fake news they might see when the enemy, at least some of the time, is improvising?

The Cambridge researchers developed a game to help people understand, broadly, how fake news works by having users play trolls and create misinformation. By “placing news consumers in the shoes of (fake) news producers, they are not merely exposed to small portions of misinformation,” the researchers write in their accompanying paper.

In the game, players assumed various roles, somewhat like the workers at the St. Petersburg troll factory. Among the roles was “denier”: someone “who strives to make a topic look small and insignificant,” such as people who claim that the Russian efforts to undermine governments around the world are either made up or insignificant. Another was “conspiracy theorist”: someone “who distrusts any kind of official mainstream narrative and wants their audience to follow suit,” for example a trafficker in fictions like Pizzagate.

Most importantly, the players had to use common tactics in misinformation and rhetoric, such as hyperbole, or exaggeration; whataboutism, or discrediting news or individuals via accusations of hypocrisy; and “conspiratorial reasoning,” defined as “theorizing that small groups are working in secret against the common good,” which tracks with the increasingly popular and yet still baseless theory that the FBI is using the Russian investigation to stage a coup.

Did the game successfully inoculate players against fake news? The researchers had 57 people play the game, adopting different personas and using different tactics associated with misinformation and persuasion. As compared to a control group, the players were much more skeptical of fake news articles they were subsequently shown. And this remained true no matter what the player’s political leanings.

“We asked participants — both in the control and treatment group— how reliable they judged their [counterfactual] article to be,” said researcher Jon Roozenbeek. “We also asked them how persuasive they thought it was. Participants could answer on a scale from 1 to 7, and what we found is that the treatment group rated their article significantly less reliable and persuasive vis-à-vis the control group. This is, of course, an imperfect measure. Unfortunately, our pilot study gave us no opportunity to explore these questions in a deeper manner. But we will keep running studies like this in the future, so more data should be coming up.”
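For readers curious what that comparison looks like in practice, here is a minimal sketch in Python. It assumes each participant gave one reliability rating on the 1-to-7 scale Roozenbeek describes. The ratings below are invented purely for illustration and are not the study's data, and the function name `mean_difference` is hypothetical.

```python
from statistics import mean

# Hypothetical 1-to-7 reliability ratings, invented for illustration only.
# These are NOT the study's data.
control_ratings = [5, 4, 6, 5, 4, 5]
treatment_ratings = [3, 4, 2, 3, 4, 3]


def mean_difference(control, treatment):
    """Return mean(control) minus mean(treatment).

    A positive value means the treatment group (the game players)
    rated the article as less reliable, on average, than the control group.
    """
    return mean(control) - mean(treatment)


diff = mean_difference(control_ratings, treatment_ratings)
print(f"control mean:   {mean(control_ratings):.2f}")
print(f"treatment mean: {mean(treatment_ratings):.2f}")
print(f"difference:     {diff:.2f}")
```

A gap in average ratings is only a starting point. Whether a difference counts as “significant,” as the researchers put it, depends on a statistical test that this sketch does not attempt.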

The study suggests that there may actually be multiple methods for countering disinformation, propaganda, and fake news from governments like Vladimir Putin’s.

Said Cook of the Cambridge paper: “Roozenbeek and van der Linden propose an exciting novel approach: ‘active inoculation’, by having students learn the techniques of fake news by creating their own fake news article. This active approach gives students the critical thinking tools to see through other fake news articles: a sorely needed skill these days.”

He said, “Another way to inoculate people against misinformation is through ‘technocognition’: technological solutions based on psychological principles. The ‘fake news game’ is potentially a powerful technocognition solution, as the concept could work well as an online game where any number of players can actively learn how to see through the techniques of fake news.”

Whether political leaders or social networks will use such strategies, or even advocate for their use, remains very much in question.