This Startup’s Racial-Profiling Algorithm Shows AI Can Be Dangerous Way Before Any Robot Apocalypse

Beros919/Shutterstock.com

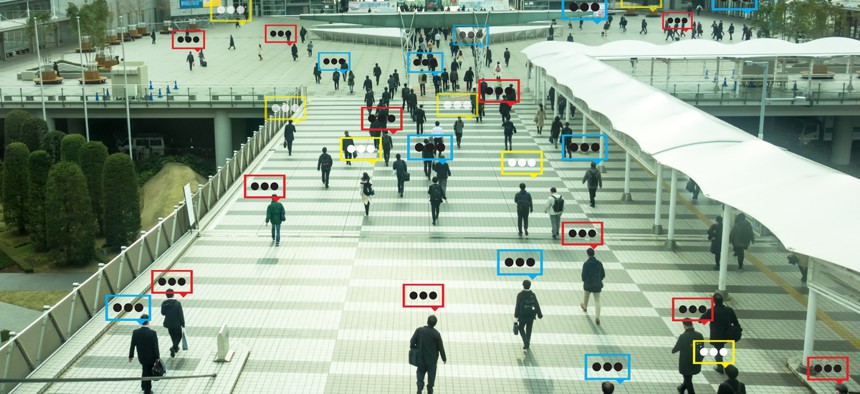

The new algorithm promises to accurately look at images of people and determine their ethnic background.

The biggest danger AI poses today isn’t the potential of killer robots or Roko’s Basilisk—it’s the potential to scale bias and racism to the size of the internet.

The latest example of this is an “ethnicity detection” algorithm that Moscow-based NtechLab markets as an “upcoming feature” of the facial recognition technology it sells. The new algorithm promises to accurately look at images of people and determine their ethnic background. An image on the company's site, since removed after public backlash, showed classifications like “European,” “African,” and “Arabic.” Although the image is gone, ethnicity recognition is still listed as an upcoming product on the NtechLab site.

Privacy advocates like the American Civil Liberties Union already decry the use of facial-recognition AI in most cases, arguing that widespread adoption of the technology would mean living under constant surveillance by police or large tech companies. Ethnicity recognition goes even further past the ACLU’s line in the sand. By making it far easier for anyone with access to surveillance cameras, whether police or private entities like airlines or sporting arenas, to flag people based on ethnicity, it would encourage overt racial profiling without even the pretense of assisting police investigations.

NtechLab might be getting the most public scrutiny, but it isn't the first company to market “ethnicity detection” AI. In April 2017, Clarifai, a startup founded by former ImageNet competition winner Matthew Zeiler, started selling access to a similar technology through an API that identifies “multicultural appearance.” In a blog post published when the feature (called “Demographics”) launched, Zeiler wrote that the company acknowledges the tool could be used maliciously but believes people on the internet will generally use it for good.

“Ultimately, it’s about intent and we have a lot of faith in humanity,” he wrote. “It’s not our business to limit what developers create and we choose to believe that most people will use our Demographics for good—like bringing attention to female representation in tech or bringing an end to human trafficking.”

Sure.
