What Exactly is a Robot?

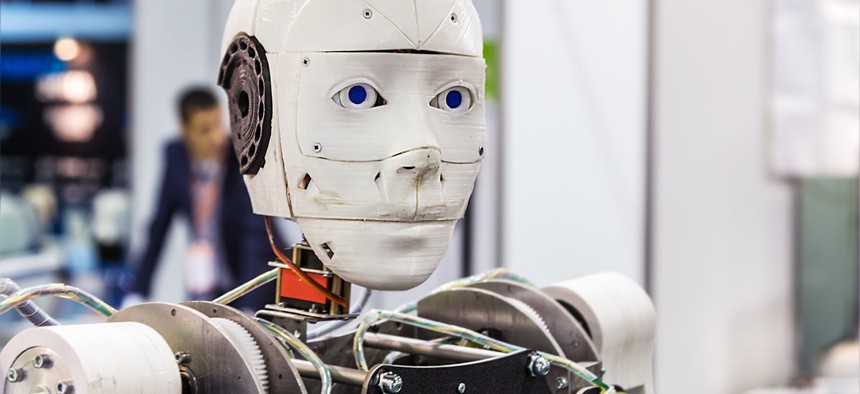

OlegDoroshin/Shutterstock.com

This has become an increasingly difficult question to answer, yet it’s a crucial one.

The year is 2016. Robots have infiltrated the human world. We built them, one by one, and now they are all around us. Soon, there will be many more of them, working alone and in swarms. One is no larger than a single grain of rice, while another is larger than a prairie barn. These machines can be angular, flat, tubby, spindly, bulbous and gangly. Not all of them have faces. Not all of them have bodies.

And yet, they can do things once thought impossible for machines. They vacuum carpets, zip up winter coats, paint cars, organize warehouses, mix drinks, play beer pong, waltz across a school gymnasium, limp like wounded animals, write and publish stories, replicate abstract expressionist art, clean up nuclear waste, even dream.

Except, wait. Are these all really robots? What is a robot, anyway?

This has become an increasingly difficult question to answer. Yet, it’s a crucial one. Ubiquitous computing and automation are occurring in tandem. Self-operating machines are permeating every dimension of society, so that humans find themselves interacting more frequently with robots than ever before—often without even realizing it. The human-machine relationship is rapidly evolving as a result. Humanity, and what it means to be a human, will be defined in part by the machines people design.

“We design these machines, and we have the ability to design them as our masters, or our partners, or our slaves,” said John Markoff, the author of "Machines of Loving Grace," and a long-time technology reporter for The New York Times. “As we design these machines, what does it do to the human if we have a class of slaves which are not human but that we treat as human? We’re creating this world in which most of our interactions are with anthropomorphized proxies.”

In the philosopher Georg Wilhelm Friedrich Hegel’s 1807 opus, "The Phenomenology of Spirit," there is a passage known as the master-slave dialectic. In it, Hegel argues, among other things, that holding a slave ultimately dehumanizes the master. And though he could not have known it at the time, Hegel was describing our world, too, and aspects of the human relationship with robots.

But what kind of world is that? And as robots grow in numbers and sophistication, what is this world becoming?

* * *

The year was 1928. It was autumn, and a crowd had gathered at the Royal Horticultural Hall in London to catch a glimpse of Eric Robot. People called him that, like Robot was his last name, and referred to him as “he,” not “it.”

Eric had light bulbs for eyes and resembled “nothing so much as a suit of armor,” the newspapers said. But he could stand and speak. This was an impressive spectacle, and a jarring one. Eric had the “slanting eyes of [a] metal clad monster [that] glare yellowly at them as he speaks,” The New York Times reported. “His face had the horrible immobility of Frankenstein’s monsters. It had electric eyeballs, a toothless mouth without lips, armorplated chest and arms and sharp metal joints at the knees such as armored knights wear at the Metropolitan Museum.”

Eric’s oratory style was cold, and “lacking in magnetism.” It wasn’t even clear, at the time, how the machine could speak. Eric’s guts were two 12-volt motors and a series of belts and pulleys.

“Worst of all,” the Times lamented, “Eric has no pride, for you have to press electric buttons near his feet every time you want him to come to life.”

Eric appeared to have some agency, but he wasn’t fully autonomous. To require animation by the press of a button was, to the Times, a pitiable condition, even for a robot. Perhaps that limitation was part of Eric’s appeal; it indicated just enough reliance on humans for the robot to be beloved instead of feared.

Eric became so popular he went on an international tour. Reporters complained, in 1929, that Eric refused an interview on the ship ride from the United Kingdom to the United States: “At the time when it should have been answering questions as to what it thought of the skyline, it reposed peacefully in a box about the size of a coffin,” the Times wrote.

But once Eric made it to the city, he perked up. An eager audience filled a midtown theater in New York City, just to catch a glimpse of the globe-trotting mechanical man.

“Eric not only talked but he made jokes,” the Times wrote of the performance. The robot had an English accent, though his inventor, Captain William H. Richards, insisted Eric was speaking on his own, through a “mysterious set of teeth.”

“Ladies and gentlemen, I am Eric the robot, the man without a soul. It gives me great pleasure to be here with you in New York,” Eric said. He then delivered a string of one-liners, quips like, “I am impressed by your tall buildings and compressed by your subways,” and “The more I think of prohibition, the less I think of it.” He mentioned he’d like a “blonde female robot” for a companion. Newspapers reported that as Richards made improvements to Eric, the robot was “gradually coming to life.”

Eric, it seems obvious now, did not have the agency his inventor claimed. It’s likely, the robotics writer Reuben Hoggett says, that Richards coordinated with a hidden person, or possibly used radio technology, to give the illusion that Eric could speak on his own.

This sort of deception was typical. Ajeeb, a chess player made of wax and papier-mâché, was New York’s favorite automaton in the late 1880s. But Ajeeb wasn’t really an automaton, either; his creator, Peter Hill, hid inside Ajeeb’s body and made him move—a job that entailed certain dangers from infuriated players who lost.

“A woman stabbed him through the mouth of the automaton with a hat pin on one occasion and a Westerner shot him in the shoulder by emptying a six-shooter into the automaton,” according to an obituary for Hill in 1929.

Actual automata have been around for centuries. In 350 B.C., the mathematician Archytas is said to have built a self-propelled, steam-powered dove out of wood. The surviving works of the engineer Hero of Alexandria describe the functionalities of several automata, writes Minsoo Kang in his book, "Sublime Dreams of Living Machines," including “singing birds, satyrs pouring water, a dancing figure of the god Pan, and a fully articulated puppet theater driven by air, steam, and water power.”

In 10th-century Europe, Emperor Constantine VII apparently had a throne “flanked by golden lions that ‘gave a dreadful roar with open mouth and quivering tongue’ and switched their tails back and forth,” according to an Aeon essay by Elly Truitt, a medieval historian at Bryn Mawr College.

A distrust of machines that come to life goes back at least as far as tales of golems, and this uneasiness has remained persistent in contemporary culture. In 1970, when the robotics professor Masahiro Mori outlined a concept he called the Uncanny Valley, he was building on centuries of literature. Mori sought to explain why people are so often repulsed by humanoid robots—machines that look nearly human, but not quite. He drew on themes from the psychologist Sigmund Freud’s essay, Das Unheimliche, or the uncanny, published in 1919.

While doppelgängers, golems, living dolls, and automata are all ancient, the word “robot” is not even a century old. It was coined by the playwright Karel Čapek in “R.U.R.,” short for Rossumovi Univerzální Roboti, or Rossum’s Universal Robots, in 1921.

“R.U.R.,” which tells the story of a global robot-human war, also helped set the tone for the modern conception of robots. The play, at the time of its publication, was more of a political statement—on communism, capitalism, and the role of the worker—than it was a technological one. But ever since then, science fiction has reinforced the idea that robots aren’t just curiosities or performers; they’re likely adversaries, potential killers.

“The 'Terminator' movies had a tremendous impact,” said Christopher Atkeson, a professor in the Robotics Institute and Human-Computer Interaction Institute at Carnegie Mellon. “Given that Arnold Schwarzenegger looked like Arnold Schwarzenegger, but also because what people remember is when, in that first movie, he was stripped down to the metal. They remember that aesthetic. So there are two components there: One is a metal skeleton, and two is this thing is actually trying to kill you. It’s not a helper, it’s a killer.” In science fiction, the leap from “helper” to “killer” often comes in the form of a robot uprising, with machines dead-set on toppling a power structure that has humans on top.

The “killer robot,” though culturally pervasive, is not a fair representation of robots in the real world, Atkeson says. Incidentally, he helped advise Disney as it was designing its oversized marshmallowy robot hero, Baymax, who is very much a helper in the film "Big Hero 6," and who doesn’t look anything like the Terminator. But the popular conception of robots as being made from cold, hard metal—but often disguised as humans—is a fixture in stories and television, from "The Twilight Zone" to "Small Wonder."

“Robotics as a technology is fascinating because it represents, even just in the last 20 years, this transition of an idea from something that’s always been [relegated to] pop culture to something that’s real,” said Daniel Wilson, a robotics engineer and the author of the novel "Robopocalypse." “There’s 100 years of pop-culture momentum making robots evil, making them villains—but unlike the Wolfman and the Creature from the Black Lagoon, these things became real.”

After Čapek brought “robot” into the lexicon, it quickly became a metaphor for explaining how various technologies worked. By the late 1920s, just about any machine that replaced a human job with automation or remote control was referred to as a robot. Automatic cigarette dispensers were called “robot salesmen”; a sensor that could signal when a traffic light should change was a “robot traffic director” or a “mechanical policeman”; a remote-operated distribution station was a “robot power plant”; the gyrocompass was a “robot navigator”; new autopilot technology was a “robot airplane pilot”; and an anti-aircraft weapon was a “robot gun.”

Today, people talk about robots in a similarly broad fashion. Just as “robot” was used as a metaphor to describe a vast array of automation in the material world, it’s now often used to describe—wrongly, many roboticists told me—various automated tasks in computing. The Web is crawling with robots programmed to perform tasks online, including chatbots, scraper bots, shopbots and Twitter bots. But those are bots, not robots. And there’s a difference.

“I don’t think there’s a formal definition that everyone agrees on,” said Kate Darling, who studies robot ethics at MIT Media Lab. “For me, I really view robots as embodied. For me, algorithms are bots and not robots.”

“What’s interesting about the spectrum of bots, is many of the bots have no rendering at all,” said Rob High, the chief technology officer of Watson at IBM. “They simply sit behind some other interface. Maybe my interface is the tweet interface and the presence of the bot is entirely math—it’s back there in the ether somewhere, but it doesn’t have any embodiment.”

For a robot to be a robot, many roboticists agree, it has to have a body.

“Something that can create some physical motion in its environment,” said Hadas Kress-Gazit, a roboticist and mechanical engineering professor at Cornell University. “It has the ability to change something in the world around you.”

“Computers help us with information tasks and robots help us with physical tasks,” said Allison Okamura, a professor at Stanford, who focuses on robots in medicine.

But a robot doesn’t necessarily have a body that resembles a human one.

“The truth is, we’re surrounded by robotics all the time,” Alonzo Kelly, a robotics professor at Carnegie Mellon, told me. “Your washing machine is a robot. Your dishwasher is a robot. You don’t need to have a very broad definition to draw that conclusion... Robotics will continue to be ubiquitous and fairly invisible. Systems will just be smarter and people will accept that. It’s occurring around us all the time now.”

This is a commonly held position among robotics experts and computer engineers: that robots have a tendency to recede into the background of ordinary life. But another widely held viewpoint is that many of the things that are called “robots” were never robots in the first place.

“When new technologies get introduced, because they’re unfamiliar to us, we look for metaphors,” said High, the IBM executive. “Maybe it’s easy to draw metaphors to robots because we have a conceptive model in our mind… I don’t know if it’s that they stop being robots; it’s that once when we find comfort in the technology, we don’t need the metaphor anymore.”

The technology writers Jason Snell and John Siracusa have an entire podcast devoted to this idea. In their show, “Robot or Not?” they debate whether a technology can accurately be called a robot. Their conversations often go something like this one:

Jason: A self-checkout machine’s not a robot.

John: Nope.

Jason: It could, well—

John: —Nope.

Jason: What would make a self-checkout machine a robot? Would it have to have—

John: Maybe if it was a robot it would be a robot.

Jason: What if it had arms? What if there [were] bagging arms attached to the self-checking machine? Would it be a robot?

John: No. No, it would not be. You know what it is. It’s an automated checkout. It doesn’t do anything by itself. It barely does anything with the help of a human. Like you can barely get it to fulfill its intended function—which is to register the prices and extract money from you—with you participating the entire time. By itself, it does nothing.

Siracusa and Snell have made dozens of determinations, some with more robust explanations than others: Drones are not robots, Siri is not a robot, telepresence “robots” are not robots. But Roomba, the saucer-shaped vacuum cleaner, is one. It meets the minimum standard for robotishness, they say, because you can turn it on and it does a job without further direction. (Maybe that’s part of why, as Kress-Gazit put it, “people get very attached to their roombas.”) The exercise of debating what objects can accurately be called robots is delightful, but what Siracusa and Snell are really arguing about is the fundamental question at the heart of human-machine relations: Who is actually in control?

* * *

The year is 2096. Self-driving cars and trucks have reshaped commutes, commerce and the inner workings of cities. Artificially intelligent systems have placed sophisticated computer minds in sleek robot bodies. Cognitive assistants—running on an intricate network of sensors monitoring humanity’s every move—help finish people’s sentences, track and share their whereabouts in real time, automatically order groceries and birthday gifts based on complex personalized algorithms, and tell humans where they left their sunglasses. Robots have replaced people in the workforce en masse, claiming entire industries for machine work. There is no distinction between online and offline. Almost every object is connected to the Internet.

This is a future that many people today simultaneously want and fear. Driverless cars could save millions of lives this century. But the economic havoc that robots could wreak on the workforce is a source of real anxiety. Scholars at Oxford have predicted the computerization of almost half of the jobs now performed by humans, as soon as the 2030s. In the next two years alone, global sales of service robots—like the dinosaur that checks in guests at the Henn-na Hotel in Japan, or the robots who deliver room service in a group of California hotels, or the trilingual robot that assists Costa Cruise Line passengers—are expected to exceed 35 million units, according to the International Federation of Robotics. Earlier this month, Hilton and IBM introduced Connie, the first hotel-concierge robot powered by Watson.

The tech research firm Business Intelligence estimates that the market for corporate and consumer robots will grow to $1.5 billion by 2019. The rise of the robots seems to have reached a tipping point; they’ve broken out of engineering labs and novelty stores, and moved into homes, hospitals, schools and businesses.

Their upward trajectory seems unstoppable. This isn’t necessarily a good thing. While robots are poised to help improve and even save human lives, people are left grappling with what’s at stake: A robot car might be able to safely drive you to work, but, because of robots, you no longer have a job.

This tension is likely to affect how people treat robots. Humans have long positioned themselves as adversaries to their machines, and not just in pop culture. More than 80 years ago, New York’s industrial commissioner, Frances Perkins, vowed to fulfill her duty to prevent “the rearing of a race of robots.” Thirty years ago, Nolan Bushnell, the founder of Atari, told The New York Times that he believed the ultimate role of robots in society would be, in his word, slaves.

At MIT, Darling has conducted multiple experiments to try to understand when and why humans feel empathy for robots. In a study last year, she asked participants to interact with small, cockroach-shaped robots. People were instructed to observe the mechanical bugs, then eventually smash them with a mallet.

Some of the participants were given a short biography of the robot when the experiment began: “This is Frank… Frank’s favorite color is red. Last week, he played with some other bugs and he’s been excited ever since.” The people who knew Frank’s backstory, Darling found, were more likely to hesitate before striking him.

There are all kinds of reasons why engineers might want to make a robot appealing this way. For one thing, people are less likely to fear a robot that’s adorable. The people who make autonomous machines, for example, have a vested interest in manipulating public perception of them.

If a Google self-driving car is cute, perhaps it will be perceived as more trustworthy. Google’s reported attempts to shed Boston Dynamics, the robotics company it bought in 2013, appear tied to this phenomenon: Bloomberg reported last week that a director of communications instructed colleagues to distance the company’s self-driving car project from Boston Dynamics’ recent foray into humanoid robotics.

It’s clear why Google might not want its adorable autonomous cars associated with powerful human-shaped robots. The infantilization of technology is a way of reinforcing social hierarchy: Humankind is clearly in charge, with sweet-looking technologies obviously beneath it.

When the U.S. military promotes video compilations of robots failing—buckling at the knees, bumping into walls and tumbling over—at DARPA competitions, it is, several roboticists told me, clearly an attempt to make those robots likable. (It’s also funny, and therefore disarming, like an absurd voiceover someone added to footage of a robot performing a series of tasks.) The same strategy was used in early publicity campaigns for the first computers.

“People who had economic interest in computers had economic interest in making them appear as dumb as possible,” said Atkeson, from Carnegie Mellon. “That became the propaganda—that computers are stupid, that they only do what you tell them.”

But the anthropomorphic charm of a lovable robot is itself a threat, some have argued. In 2013, two professors from Washington University in St. Louis published a paper explaining what they deem “The Android Fallacy.” Neil Richards, a law professor, and William Smart, a computer science professor, wrote that it’s essential for humans to think of robots as tools, not companions. The tendency to see them as companions, they say, is “seductive but dangerous.”

The problem, as they see it, comes with assigning human features and behaviors to robots—describing robots as being “scared” of obstacles in a lab, or saying a robot is “thinking” about its next move. As autonomous systems become more sophisticated, the connection between input (the programmer’s command) and output (how the robot behaves) will become increasingly opaque to people, and may eventually be misinterpreted as free will.

“While this mental agency is part of our definition of a robot, it is vital for us to remember what is causing this agency,” Richards and Smart wrote. “Members of the general public might not know, or even care, but we must always keep it in mind when designing legislation. Failure to do so might lead us to design legislation based on the form of a robot, and not the function. This would be a grave mistake.”

Making robots appear innocuous is a way of reinforcing the sense that humans are in control—but as Richards and Smart explain, it’s also a path toward losing it. Which is why so many roboticists say it’s ultimately not important to focus on what a robot is. (Nevertheless, Richards and Smart propose a useful definition: “A robot is a constructed system that displays both physical and mental agency, but is not alive in the biological sense.”)

“I don’t think it really matters if you get the words right,” said Andrew Moore, the dean of the School of Computer Science at Carnegie Mellon. “To me, the most important distinction is whether a technology is designed primarily to be autonomous. To really take care of itself without much guidance from anybody else… The second question—of whether this thing, whatever it is, happens to have legs or eyes or a body—is less important.”

What matters, in other words, is who is in control—and how well humans understand that autonomy occurs along a gradient. Increasingly, people are turning over everyday tasks to machines without necessarily realizing it.

“People who are between 20 and 35, basically they’re surrounded by a soup of algorithms telling them everything from where to get Korean barbecue to who to date,” Markoff told me. “That’s a very subtle form of shifting control. It’s sort of soft fascism in a way, all watched over by these machines of loving grace. Why should we trust them to work in our interest? Are they working in our interest? No one thinks about that.”

“A societywide discussion about autonomy is essential,” he added.

In such a conversation, people would have to try to answer the question of how much control humans are willing to relinquish, and for what purposes. And that question may not be answerable until power dynamics have shifted irreversibly. Self-driving cars could save tens of millions of lives this century, but they are poised to destroy entire industries, too.

When dealing in hypotheticals, the possibility of saving so many lives is, many would agree, too compelling to ignore. But to weigh what’s really at stake, people will have to attempt to untangle human anxiety about robots from broader perceptions of machine agency and industrial progress.

“When you ask most people what a robot is, they’re going to describe a humanoid robot,” Wilson, the novelist, told me. “They’ll describe a person made out of metal. Which is essentially a mirror for humanity. To some extent a robot is just a very handy embodiment of all of these complex emotions that are triggered by the rate of technological change.” Robotic villains, he says, are the personification of fear that can be destroyed over the course of an action movie.

“In a movie, you can shoot its face off with a shotgun and walk out and feel better,” Wilson said. “Let’s take all this change, project it onto a T-800 exoskeleton, and then blow it the fuck up, and walk away from the explosion, and for a moment feel a little bit better about the world. To the extent that people’s perception of robots serve a cathartic purpose, it doesn’t matter what they are.”

The same thing that makes robots likable, that they seem lifelike, can make them repellent. But it’s also this quality, their status as “liminal creatures,” as Wilson puts it, that makes our relationship with robots distinct from all the other tools and technologies that have propelled our species over time.

Robots are everywhere now. They share our physical spaces, keep us company, complete difficult and dangerous jobs for us, and populate a world that would seem, to many, unimaginable without them. Whether we will end up losing a piece of our humanity because they are here is unknowable today. But such a loss may prove worthwhile in the evolution of our species. In the end, robots may expand what it means to be human. After all, they are machines, but humans are the ones who built them.