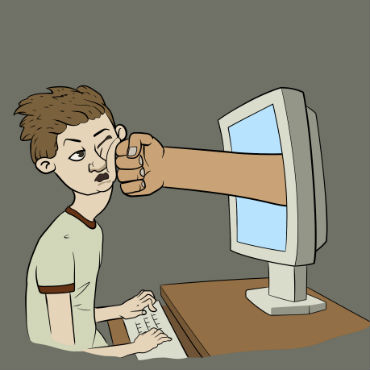

Russian cyber meddling extends well beyond elections

Fake FCC comments are yet another sign of Russia's ongoing destabilization campaign against U.S. targets, a social media expert told Congress.

A wave of fake email comments similar to the one that flooded the Federal Communications Commission's servers this past summer, tagged to Russian IP addresses, might also swamp the servers of other federal agencies in the future, as bad actors move to subvert and undermine U.S. democratic processes, a social media expert told a Senate hearing.

Russian meddling in the 2016 U.S. elections and at the FCC is part of a larger effort by that country's government to attack the integrity of the federal government's systems, Clint Watts, a fellow at the Foreign Policy Research Institute, told a Jan. 17 Senate Commerce, Science and Transportation Committee hearing on social media and terrorism.

Watts, a former FBI special agent who served on the Joint Terrorism Task Force, said the goal of election meddling is not necessarily to throw an election to a specific candidate, but to cripple public confidence in elections more broadly.

Similarly, he said the influx of 500,000 comments to the FCC's public comment system about its net neutrality rules was part of the Russian government's ongoing program to sow discontent and suspicion among U.S. citizens.

"It's a 'you can't trust the process'" approach, he said, adding that the Russian government has used similar techniques on its own population to make them apathetic and mistrustful of their own elections.

According to Watts, the Russian government has seen success with the low-cost program, which the U.S. government hasn't firmly addressed.

He predicted the program will continue in the U.S. and spread to other countries such as Mexico and Myanmar, where still-emerging technological environments mean many individuals aren't as technologically literate.

Representatives from social media companies Facebook, Twitter and YouTube, meanwhile, told the committee they are increasingly implementing machine learning and artificial intelligence to detect terror recruitment and messaging on their platforms.

For instance, YouTube Director of Public Policy and Government Relations Juniper Downs told the panel that since June, her company has removed over 160,000 violent extremist videos and terminated some 30,000 channels for violation of policies against terrorist content.

As the next election looms, those companies said they are preparing to sift through some of the political ads and other data that might be steered by questionable sources.

Downs said her company is looking for more transparency and verification about who is behind certain ads that appear on the platform, as well as a transparency report that would provide more detail on that content.

Watts warned the Russian effort may be more insidious than those of other bad actors, such as terrorist groups, because the Russian agents "operate within the rules" of the platforms and don't use the inflammatory language and terms that AI and machine learning systems are trained to discern.