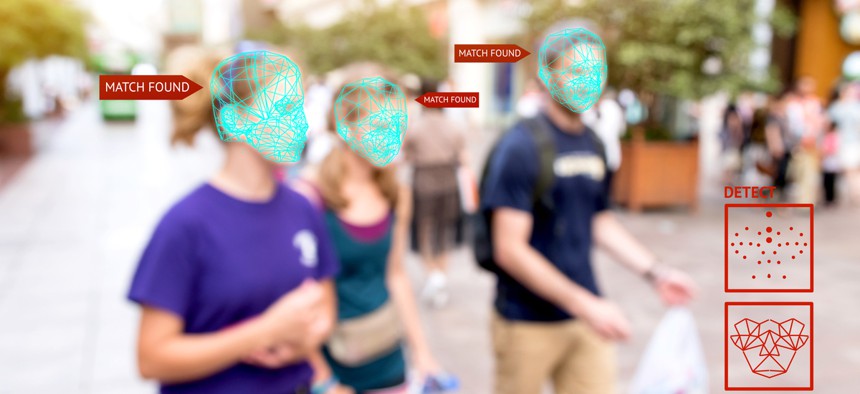

Facial Recognition Is Here to Stay. And We Should All Probably Accept It

Zapp2Photo/Shutterstock.com

The public can still push for transparency and press for ethical guidelines to be put in place.

For the past few years, the world’s biggest tech companies have been on a mission to put artificial-intelligence tools in the hands of every coder. The benefits are clear: Coders familiar with free AI frameworks from Google, Amazon, Microsoft, or Facebook might be more inclined to someday work for one of those talent-starved companies. Even if they don’t, selling pre-built AI tools to other companies has become big business for Google, Amazon, and Microsoft.

Today these same companies are under fire from their own employees over who this technology is being sold to, namely branches of the U.S. government such as the Department of Defense and Immigration and Customs Enforcement (ICE). Workers from Google, Microsoft, and now Amazon have signed petitions, and some have quit, in protest of the government work. It’s had some impact: Google released AI ethics principles and publicly affirmed that it would not renew its Department of Defense contract when it expires in 2019. Microsoft told employees in an email that it wasn’t providing AI services to ICE, though that contradicts earlier descriptions of the contract on the company’s website, according to Gizmodo.

This debate, playing out very publicly, marks a major shift in how tech companies and their employees talk about artificial intelligence. Until now, everyone has been preaching the Gospel of Good AI: Microsoft CEO Satya Nadella has called it the most transformational technology of a generation, and Google CEO Sundar Pichai has gone even further, saying AI will have an impact comparable to that of fire and electricity.

That impact goes well beyond ad targeting and tagging photos on Facebook, whether researchers like it or not, says Gregory C. Allen, an adjunct fellow at the Center for a New American Security (CNAS), who co-authored a report called “Artificial Intelligence and National Security” last year. While we’re collectively OK with AI being used to spot celebrities at the royal wedding, some of us have second thoughts when it comes to picking out protesters in a crowd.

“There’s no opt-out choice, where I, as an AI researcher, say that there will never be national security implications of AI. Thomas Edison was not in a position to say that there will never be national security implications of electricity,” Allen told Quartz. “That’s an inherent property of useful technologies, that they tend to be useful for military applications.”

AI like facial recognition is what’s called a dual-use technology, meaning it can have a positive or negative impact depending on how it’s used. Researchers from OpenAI and the Future of Humanity Institute, as well as Allen at CNAS, grappled with this distinction in a February 2018 report laying out the potential dangers of AI.

“Surveillance tools can be used to catch terrorists or oppress ordinary citizens. Information content filters could be used to bury fake news or manipulate public opinion. Governments and powerful private actors will have access to many of these AI tools and could use them for public good or harm,” the report says.

Researchers have been trying to teach machines to recognize human faces for decades. The first work on the technology can be traced back to the 1960s, but modern facial recognition has flourished since the September 11 attacks in the United States, due to increased funding for national security, according to the Boston Globe.

To spur further advancements, tech companies have published their research and released code on the open internet, a boon to researchers around the world. But that practice also means others, like defense contractors, have access too. The rise of Palantir as a government contractor is predicated on its ability to analyze data with machine-learning algorithms, and companies like NEC have licensed facial recognition technology from academics expressly to sell it to law enforcement.

While Google, Microsoft, and Amazon are likely the best equipped to provide large-scale computing to government entities, they’re far from the only ones. But no matter who implements the technology, the public can push for transparency and press for ethical guidelines to be put in place.