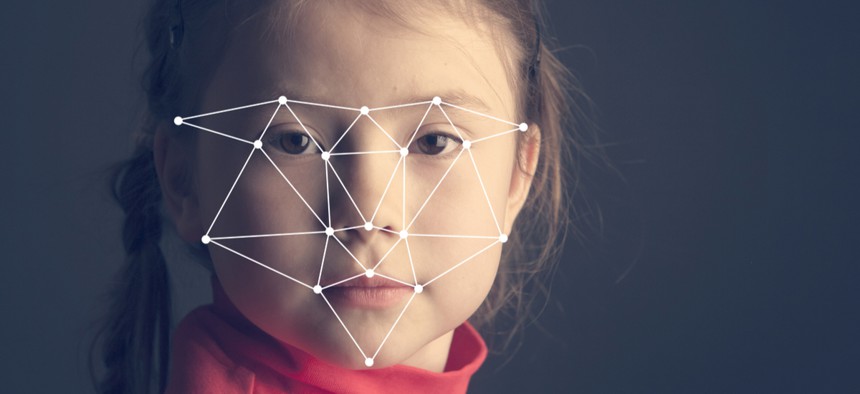

The Tenants Fighting Back Against Facial Recognition Technology

Anton Watman/Shutterstock.com

The landlord of a rent-stabilized apartment complex in Brooklyn wants to install a facial recognition security system, sparking a debate about privacy and surveillance.

Last year, residents of Atlantic Plaza Towers, a rent-stabilized apartment building in Brooklyn, found out that their landlord was planning to replace the key fob entry system with facial recognition technology. The goal, ostensibly, was to modernize the building’s security system.

But some residents were immediately alarmed by the prospect. They felt the landlord’s promise of added security was murky at best, and that it didn’t outweigh their concerns about having to surrender sensitive biometric information to enter their own homes. Last week, lawyers representing 134 concerned residents of the building filed an objection with the state housing regulator. It is the first visible opposition in New York City to the deployment of such technology in a residential setting.

“We’re calling ourselves the Rosa Parks of facial recognition in residential buildings,” said Icemae Downes, a resident who has lived in the building since 1968 and was part of a residents’ protest last week. “We’re standing up to say, ‘No, we don’t feel this is right.’”

Before making any major changes, landlords overseeing rent-stabilized apartments in New York have to file an application with the state’s Homes and Community Renewal (HCR) agency. After Nelson Management Group, which runs Atlantic Plaza Towers, started that process last year, some tenants formally registered their reservations. Last week’s filing consolidated the objections of 134 residents from the 700-unit complex, arguing that installing the scanner would violate the terms of their leases. The lawyers are now demanding a hearing on the matter.

“The landlord has made it clear that tenants will not be permitted to opt out,” said Samar Katnani, an attorney at Brooklyn Legal Services’ Tenant Rights Coalition, who is representing the residents. “So there is no concept of consent in this—really, it’s the tenants having no agency over this unique information.”

Nelson Management told Gothamist that the technology is intended to boost security, and that it has “engaged a leading provider of security technology for proposed upgrades, which has assured ownership that data collected is never exposed to third parties and is fully encrypted.”

The building complex already has a security guard and its common areas are wired with several security cameras. And the residents say they already feel heavily watched. Last month, the New York Times reported that when some of them gathered in the lobby to discuss the facial recognition system, building management sent them a notice with pictures taken from a security camera and a warning that the lobby was not “a place to solicit, electioneer, hang out or loiter.” (Landlords, however, don’t have the authority to ban peaceful assembly in this way.)

“How much more surveillance do we need?” said Downes, who is a longtime tenant organizer. She worries that the technology will have a chilling effect not just on the residents, but on anyone who visits them. “We’re saying we don’t want this; we’ve had enough. We should not feel like we’re in a prison to enter into our homes.”

Facial recognition technology has proliferated dramatically in the last few years, but the rules governing its use haven’t caught up. That’s particularly concerning given research out of MIT detailing how inaccurate it can be for people of color and women: One analysis found that commercial facial-analysis software had a maximum error rate of 0.8 percent for lighter-skinned men, but error rates as high as 34.7 percent for darker-skinned women.

Even if the technology becomes more accurate, its growing reach into public spaces and its potential impact on vulnerable residents have long worried privacy and civil liberties advocates. Historically, poor and marginalized communities, and by extension the spaces in which they live, have been disproportionate targets of state surveillance, and new technologies and techniques are often tested on these groups before being rolled out more widely, advocates say. (See, for example, the Chinese government’s surveillance of the Uighur Muslim community.) These communities also face particular privacy risks if the databases storing their biometric information are breached or hacked.

In 2016, Georgetown Law’s Center on Privacy and Technology found that the facial recognition networks used by federal, state, and local law enforcement include 117 million American adults. “A few agencies have instituted meaningful protections to prevent the misuse of the technology,” the report reads. “In many more cases, it is out of control.” (The report proposes model legislation to regulate the conditions under which law enforcement may use the technology.)

In San Francisco and Oakland, privacy advocates have been pushing legislation that would forbid city agencies from using facial recognition technology. If the legislation passes, they would be the first cities to take such a step. “It’ll be too dramatic of a power shift between the government and the governed,” said Brian Hofer, chair of Oakland’s Privacy Advisory Commission and one of the chief architects of the ordinance, describing the risk of mission creep. “We’ll be tracked the minute we step out of our home, and lose the ability to be anonymous in public.”

The use of this technology in public and subsidized housing appears to be spreading: In Detroit, a controversial surveillance program that recently integrated facial recognition may be coming to public housing. Since 2010, activists in Los Angeles have been fighting against the police department’s push to outfit public housing projects with security cameras, and use facial recognition technology to match the captured faces to pictures in gang databases. (Those databases have been criticized as being overly broad, inaccurate, and opaque.) Even private developers are jumping on the bandwagon. In New York, affordable housing developer Omni New York boasted it would feature “a state of the art facial recognition system at the front entrance” of one of its Bronx developments for low-income families and former homeless veterans.

To allay privacy concerns, Stonelock, the company providing the technology to Atlantic Plaza, maintains that its system works differently from other commercial facial recognition products: It collects only a small percentage of the visual information it scans. The company also has no access to the captured data and does not share it with third parties, a spokesperson said. The customer, in this case the landlord, controls the information captured by the scanner, which can photograph faces from up to three feet away.

That’s still a problem, Downes said, because the tenants have little say in the matter and no avenue for redress if something goes awry. It exacerbates an already imbalanced power dynamic between the landlord and the residents, most of whom are people of color and women. “Why should a private person be able to say that I own this property and I will do what I want to do to my tenants?” she said. “We’re saying that’s a slave mentality and we don’t like it.”

Low-income housing complexes may be one early testing ground for residential applications of facial recognition technology. But they’re not the only ones. Amazon’s doorbell company, Ring, is coming out with a video doorbell that incorporates facial recognition, which has the ACLU worried about the risk of high-tech profiling of “suspicious” persons.

In the case of Atlantic Plaza Towers, it’s not clear when the state agency will make a final decision on the new system. For Downes, the matter needs to be decided by state legislators who can draft proper guidelines for the use of such technology, whose consequences are not well understood.

“Their residential system has not been tested and we do not want to be their test case,” she said.