A new government digital services playbook

Steve Kelman applauds another effort to share knowledge across the contractor community.

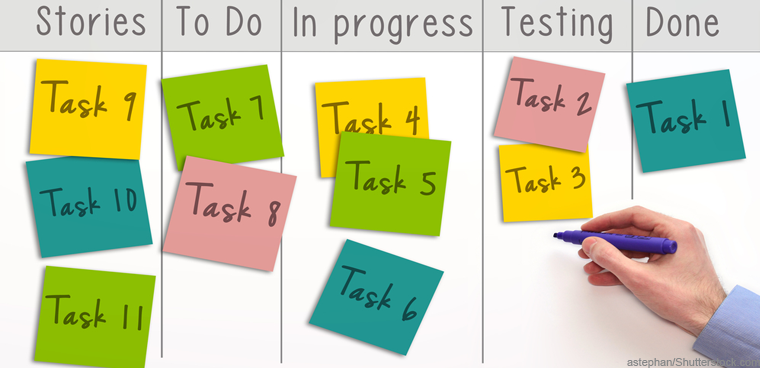

In 2014, one of the first arrivals on the scene after creation of the new U.S. Digital Service was the U.S. Digital Services Playbook, with 13 "plays" to guide government in successful execution of digital projects.

Not coincidentally, 2014 was also the year a new government IT contractor called Ad Hoc was founded by some people who had worked on the rescue of HealthCare.gov -- the disastrous 2013 launch of which was a catalyst for the establishment of the USDS. Starting with four employees, Ad Hoc today employs 120.

And now, about four years later, the firm has published its own Ad Hoc Government Digital Services Playbook. Authored by Kaitlin Devine and Paul Smith and directed at government managers of digital services projects, the playbook aims to share what the Ad Hoc team has learned from four years of doing digital projects. It ties to the 13 original plays in the USDS Playbook and follows its organization into principles, checklists and questions.

"We want to share our knowledge in hopes that other teams can continue to build on the progress we and many other organizations are making in improving government digital services," Devine and Smith write.

With this sharing of knowledge, Ad Hoc is following in the footsteps of the Digital Services Coalition, a support group of non-traditional IT contractors, about which I blogged a while ago. As Agile 6 CEO Robert Rasmussen, who founded the Digital Services Coalition, put it, new firms in the government IT space should aim to cooperate more than compete, and to create an environment where "other member firms have your back."

Rather than attempting an exhaustive summary of the points in the Ad Hoc playbook, I have chosen some quotes making points that would be useful to actual government managers working on such projects:

- Successful use of commercial practices in the government requires more than a simple transfer of Silicon Valley practices to federal agencies. "Government is a unique client because it must serve everyone, is accountable to the public (versus the market), and is constrained by legislation and rule-making. Government digital service delivery blends the best practices of modern, consumer-facing software with the security, stability, and accountability that government services require."

- "User-centrism is at the core of government digital services. It distinguishes them from enterprise software, where users are expected to have substantial training and domain knowledge, or conform to confusing business-processes-as-software. While government had substantial experience building enterprise software systems prior to 2013, when HealthCare.gov launched, it didn't have comparable experience delivering digital services, such as those users have become accustomed to in the commercial sector. The challenge of the past four years has been introducing to government the practices and processes that set user-centered services up for success."

- "It's wrong to start with an organization-centric approach, modeling the service around internal data, structures, and processes. This tends to lead to services that are confusing to users, and has little or no emphasis on the quality of their experience. It is important to not burden the user with understanding an agency's internal structure, processes, or bureaucracy to achieve a goal."

- "The kinds of teams and systems architectures it takes to deliver digital services do not resemble that of traditional enterprise software. Exposing enterprise software to the general public as digital services, or using enterprise software delivery practices to create digital services, has been a recipe for failed technology projects."

A follow-up question to ask from this section of the report is, "Do our users need to know how our organization is structured to use our product?" (Note, however, that this reflects a dilemma that long predates digital government: It is a version of the old advice to adapt agency processes to software capabilities rather than vice versa -- but agencies often resist doing so.)

- Start with serious user research -- again, not a new thought, but for years honored far more in the breach than in the observance. "What if you find out in your initial research that 85% of your users access your service on mobile? You've learned that you should build a mobile experience first, instead of finding that out at the end of the development cycle when it's much more costly to change course. You can also learn a lot by interviewing stakeholders, especially those in support functions, like a help desk. You can prioritize product features based on help desk requests, allowing the help desk to spend more time on users with more difficult problems." (This is very useful, highly practical advice, and I've never seen it offered before.)

"This can also bring down the overall cost of the call center. What you learn from these efforts pays for itself later in cost avoidance. If you built your product before researching the problem, you now have to spend a lot of extra time and money to go back and retrofit your product. Not only could this have been avoided, but now you have a mobile experience that feels 'bolted on' and not baked into the product. Conducting initial discovery research helps you optimize what you're building for the greatest number of users."

Questions the playbook asks at the end of this discussion include: "Are we providing the time and space for proper discovery without rushing to development?"

- "Successful digital service delivery teams tend to be cross-functional and fully vertically integrated, from user research and design all the way through development and operations. They take a product management approach to delivery, which means owning and being responsible for the entire user experience."

- "When systems are overbuilt, or built incorrectly for the problem, they must be scaled up and out of proportion to work effectively. Money and effort is wasted, and the user experience suffers. We have also observed this in the form of complicated and hard-to-use interfaces. Most of the development time and effort on digital services is spent on accommodating edge-cases and business rules that impact a small percentage of users. … Government must serve everyone, so what can be done? One approach may be to build 'super-user' interfaces alongside the main digital service, following the classic 80/20 rule."

- "Overly complicated or complex architectures and user interfaces have doomed major projects. Experience has taught that most of these failed services were unnecessarily complicated, and needed to be radically simplified to succeed. This is especially important in high-traffic, high-demand consumer-facing digital services that must serve requests quickly and efficiently."

- "The technical architecture of the service constrains the efficiency of a transaction in the service. … One way to succeed is by choosing proven, commodity software for your technology stack, especially for core components such as databases and application servers. Use technologies that are familiar to the broader web development world, and architect them in familiar configurations. This will lead to simpler, more efficient implementations that are easier to troubleshoot. Free your team to focus on optimizing the user experience, instead of battling with core infrastructure."

In the checklist for this section of the playbook, the authors list using "boring technologies, especially in critical core components such as databases, limiting use of more innovative tech to well-defined experiments where effects of failure can be contained."

- "Never roll out a new service by abruptly flipping a switch and redirecting all traffic to the new system. Tooling that allows you to switch just certain demographics or types of users to a new system is widely available. Offer an alpha or beta version that users opt-in to trying out. Or, direct traffic for just a small section of the application to the new system. … Because users take time to acclimate to a new system, allow them to revert back to the legacy application for a period of time after launch. With this approach, you can also gather analytics on gaps in your information architecture that cause users to revert back to the old version to find something."
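For readers who want to see what this staged-rollout advice can look like in practice, here is a minimal sketch in Python. It is not taken from the playbook. The function names, the use of a hash to assign users to buckets, and the `opted_in` and `reverted` flags are all illustrative assumptions; real projects would typically rely on an existing feature-flag or traffic-routing tool.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket from 0 to 99 (hypothetical helper)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_system(user_id: str, rollout_percent: int,
                  opted_in: bool = False, reverted: bool = False) -> str:
    """Decide whether a user sees the "new" or the "legacy" system.

    Assumed rules, in priority order:
    1. A user who asked to go back always gets the legacy system.
    2. A user who opted in to the beta always gets the new system.
    3. Everyone else is routed by a stable percentage bucket, so the
       same user lands on the same system on every visit.
    """
    if reverted:
        return "legacy"
    if opted_in:
        return "new"
    return "new" if rollout_bucket(user_id) < rollout_percent else "legacy"


if __name__ == "__main__":
    # Route roughly 10% of ordinary traffic to the new system.
    for uid in ["alice", "bob", "carol"]:
        print(uid, choose_system(uid, rollout_percent=10))
```

Raising `rollout_percent` step by step moves more users over without ever flipping a single switch, and counting how many users set `reverted` gives the kind of signal about gaps in the new system that the quote describes.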

- "When building new functionality around legacy components, we have taken steps to ensure the new functions gracefully degrade, and don't overwhelm the legacy system. … We have a plan to, over time, decompose a monolithic legacy system into a set of smaller, more focused microservices, centered around the user's needs."
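One way to picture "gracefully degrade, and don't overwhelm the legacy system" is the following minimal Python sketch. It is my own illustration under stated assumptions, not Ad Hoc's implementation: the `LegacyGuard` class, its `max_concurrent` limit, and the idea of falling back to a cached or simplified response are all hypothetical.

```python
import threading


class LegacyGuard:
    """Cap concurrent calls to a legacy system and fall back when it is busy or failing."""

    def __init__(self, max_concurrent: int):
        # Assumed limit on how many requests the legacy system can take at once.
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, legacy_call, fallback):
        # If every slot is taken, degrade immediately instead of queuing
        # more load onto the legacy system.
        if not self._slots.acquire(blocking=False):
            return fallback()
        try:
            return legacy_call()
        except Exception:
            # The legacy system failed: serve the degraded response instead.
            return fallback()
        finally:
            self._slots.release()


if __name__ == "__main__":
    guard = LegacyGuard(max_concurrent=2)
    print(guard.call(lambda: "live data", lambda: "cached data"))
```

The design choice here is to refuse rather than wait: a new feature that queues requests during a traffic spike can still bring the legacy system down, while one that serves a degraded answer keeps both running.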

- The playbook even has something about my favorite area, contracting. "Structure your contracts as a six month base period with several six month option periods. This allows you to conduct your discovery and prototyping in the base period, and make a more informed decision to either execute an option to continue with delivery, or pivot based on your discovery and prototyping work."

The option periods should also be used as an opportunity to consider re-opening the contract to competition, which "maintains competitive pressure on the vendor to keep up the quality and speed of delivery. … Make sure to keep your statement of work up to date with the newest information from your project. This will ensure you feel prepared to re-compete the contract instead of exercising an option if it becomes necessary."

(Again, keeping potential competition open beyond initial award is a point that has been made before; being able to execute may depend on streamlined competition and use of APIs for interfaces between the work of successive contractors.)

- "Policy and subject matter experts should sit down and collaborate with technologists, not just in the initial conception and ideation phase, but in all phases of the process, through research, delivery sprints, and iterations on design and new feature development. Good engineers can suggest tradeoffs, alternate implementation paths, and new ideas based on their familiarity with the capabilities of the technology."

One of the questions this section asks is, "How often do SMEs attend sprint reviews, or listen in on usability test sessions to see the product in action?"

- "In our experience, the choice between 'custom software development' and 'customized COTS' is a false one. Very little is custom-built from scratch anymore: any effective engineering effort will use lots of pre-existing software, from operating systems and databases to libraries and frameworks. COTS products may promise total solutions, but they will inevitably need to be pared-down and customized. Everything has trade-offs. The question is whether you will examine the trade-offs explicitly or not. … Default to building small, focused custom applications built on commodity infrastructure and open source stacks."

- "It makes no sense to graft legacy data center management practices on top of the cloud, for example, manually modifying infrastructure, applying regressive network rules, and extended downtime from 'maintenance windows.' … Similarly, formal change control boards and processes, while well-meaning and sensible in more static, legacy data center environments where changes can be very risky and expensive, should be reevaluated when deploying to the cloud."

One of the things I like about this playbook is that it was written at all. It is easy, and tempting, as a vendor to regard the insights discussed here as the company's intellectual property and want to keep them secret from potential competitors. Ad Hoc, like Agile 6's Rasmussen, is doing the opposite. We should all be grateful for this.

There's much more in this playbook than I have room for in this somewhat random summary. Read it! And Ad Hoc team, thank you.