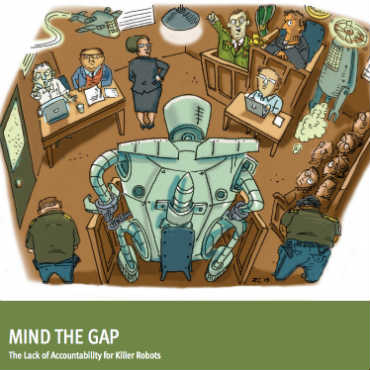

Who's responsible for the autonomous killer robots?

A new report explores the legal, moral and practical concerns of fully autonomous weapons.

What: A report from Human Rights Watch and Harvard Law School's International Human Rights Clinic on the legal, moral and practical concerns regarding fully autonomous weapons.

Why: The report's subtitle, "The lack of accountability for killer robots," suggests science fiction, but the authors argue that "technology is moving in [that] direction, and precursors are already in use or development," citing automated defense systems such as Israel's Iron Dome and the U.S. Phalanx and C-RAM.

Fully autonomous weapons, however, would be all but incapable of distinguishing between lawful and unlawful targets, as international humanitarian law requires. If an autonomous system illegally killed noncombatants, it is unlikely that either the commanders overseeing it or the programmers and manufacturers who developed it could be held legally liable. There is also the risk of an arms race that could put such weapons in the hands of those with little regard for the law.

Granted, not many IT professionals will be managing battlebots anytime soon. And as the report makes clear, the authors' concerns about civil accountability would be all but moot for U.S. military personnel and contractors, thanks to existing immunity provisions. But the questions are interesting ones and have broader relevance as systems of all sorts grow increasingly autonomous.

Verbatim: "Existing mechanisms for legal accountability are ill suited and inadequate to address the unlawful harms fully autonomous weapons might cause. These weapons have the potential to commit criminal acts—unlawful acts that would constitute a crime if done with intent—for which no one could be held responsible."

Full report: Read the whole thing here.