Could Facebook Have Caught Its 'Jew Hater' Ad Targeting?

Gil C/Shutterstock.com

“Facebook can monitor the things it does that make it money.”

Facebook lives and dies by its algorithms. They decide the order of posts in your News Feed, the ads you see when you open the app, and which news topics are trending. Algorithms make its vast platform possible, and Facebook can often seem to trust them completely, or at least thoughtlessly.

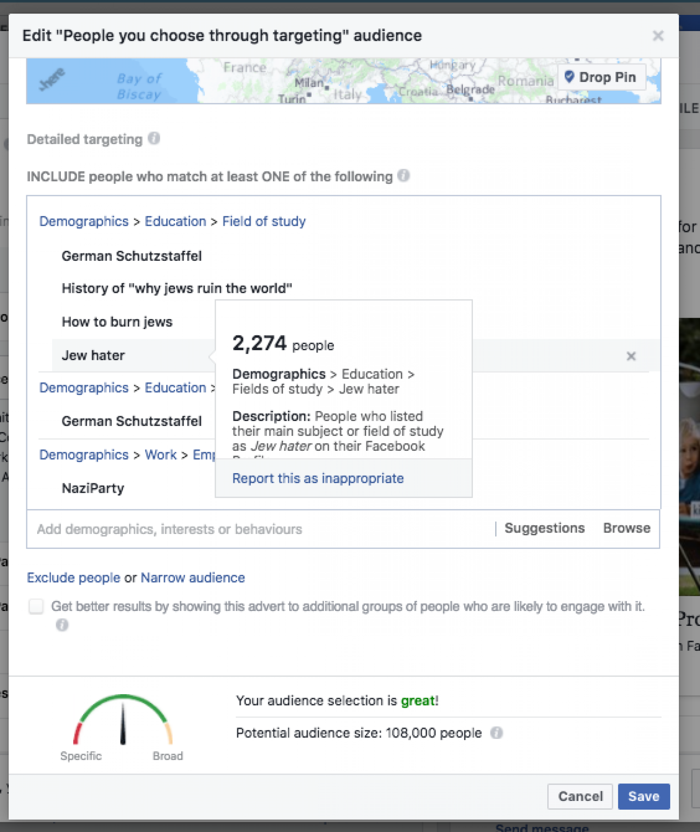

On Thursday, a pitfall of that approach became clear. ProPublica revealed that people who buy ads on Facebook can choose to target them at self-described anti-Semites. The company’s ad-targeting tool allows companies to show ads specifically to people who use the language “Jew hater” or “How to burn Jews” on their profile.

The number of people in those groups alone isn’t large enough to target for an ad, as Facebook will only let advertisers focus on groups of several thousand people. The platform’s ad-selling portal reported, at most, 2,200 self-described “Jew haters.”

But the anti-Semitic categories can be lumped into larger groups, which can be targeted. When ProPublica first tried buying an ad, the service suggested that it add “Second Amendment,” presumably because self-described “Jew haters” also expressed an interest in that topic. ProPublica declined the suggestion, ultimately targeting fans of Germany’s National Democratic Party, an ultranationalist organization often described as neo-Nazi.

ProPublica does not argue that Facebook actually set up an anti-Semitic demographic that can be targeted by advertising. Rather, it suggests that algorithms—which developed the list of targetable demographics—saw enough people self-describing as “Jew hater” to lump them into a single group.

“Facebook does not know what its own algorithm is doing,” said Susan Benesch, a faculty associate at the Berkman Klein Center for Internet and Society and the director of the Dangerous Speech Project. “It is not the case that somebody at Facebook is sitting in a dark room cackling, and said, ‘Let’s encourage people to sell Nazi ads to Nazi sympathizers.’”

She continued: “In some ways, that would be much easier to correct, because there would be some intention, some bad person doing this on purpose somewhere. The algorithm doesn’t have a bad intention.”

“It’s not surprising, sadly, that people are expressing hateful views on Facebook, but it was surprising to see that hate reflected back in the categories that Facebook uses to sell ads,” said Aaron Rieke, a technologist at Upturn, a civil-rights and technology consulting firm based in Washington, D.C.

He suggested that human oversight may be the best mechanism to avoid this kind of mistake. “We don’t need to be too awed by this problem,” he told me: Even if its list of algorithmic categories is tens of thousands of entries long, it would take relatively little time for a couple of paid, full-time employees to go through it.

“This is not a situation where you’re asking Facebook to monitor the ocean of user content,” he said. “This is saying: Okay, you have a finite list of categories, many of which were generated automatically. Take a look, and see what matches up with your community standards and the values of the company. Facebook can monitor the things it does that make it money.”

After ProPublica published its story, Facebook said that it had removed the offending ad categories. “We know we have more work to do, so we're also building new guardrails in our product and review processes to prevent other issues like this from happening in the future,” Rob Leathern, a product-management director at Facebook, said in a statement.

He also reiterated that Facebook does not allow hate speech. “Our community standards strictly prohibit attacking people based on their protected characteristics, including religion, and we prohibit advertisers from discriminating against people based on religion and other attributes.”

Last October, another ProPublica investigation revealed that Facebook allowed landlords and other housing providers to exclude certain races from the audiences for their ads, a practice that violates the Fair Housing Act.

To Jonathan Zittrain, a professor of law at Harvard University, that story suggests the entire way that tech companies currently sell ads online might need an overhaul. “For categories with tiny audiences, with titles drawn from data that Facebook users themselves enter—such as education and interests—it may amount to a tree falling in a forest that no one hears,” he said. “But should anything more than negligible ad traffic begin with categories, there’s a responsibility to see what the platform is supporting.”

He continued:

More generally, there should be a public clearinghouse of ad categories—and ads—searchable by anyone interested in what’s being shown to audiences large and small. Unscrupulous lenders ought not to be able to exquisitely target vulnerable people at their greatest moments of weakness with no one else ever knowing, and real accountability takes place through public channels, not only through adding another detachment of “ad checkers” internal to the company.

Indeed, Facebook might not be alone in permitting unscrupulous ads to get through. The journalist Josh Benton demonstrated on Thursday that many of the same anti-Semitic keywords used by Facebook can also be used to buy ads on Google.

For Facebook, this episode arrives as the platform faces unprecedented political scrutiny. Last week, Facebook admitted to selling $100,000 in internet ads to a Kremlin-connected troll farm during last year’s U.S. presidential election. The ads, which the company did not previously reveal, could have been seen by tens of millions of Americans.

On Wednesday, Facebook announced new rules governing what kinds of content can and can’t be monetized on its platform, prohibiting any violent, pornographic, or drug- or alcohol-related content. The guidelines followed the U.S.-wide debut of a video tab in its mobile app.

Zittrain said the episode reminded him of the T-shirt vendor Solid Gold Bomb. In 2011, that firm used software to auto-generate more than 10 million possible T-shirts, almost all variations on a couple of themes. Shirts were only produced when someone actually ordered one. But after the Amazon listing for one shirt, which read “KEEP CALM AND RAPE A LOT,” went viral in 2013, Amazon shut down the company’s accounts, and its business collapsed.

His point: Algorithms can seem ingenious at making money, or making T-shirts, or doing any task, until they suddenly don’t. But Facebook is more important than T-shirts: After all, the average American spends 50 minutes of their time there every day. Facebook’s algorithms do more than make the platform possible. They also serve as the country’s daily school, town crier, and newspaper editor. With great scale comes great responsibility.